Gamifying My Life: How I Built an AI Game Master

I built elaborate knowledge systems with RAG and Neo4j. I tracked everything meticulously in Obsidian. But I still wasn't motivated to actually write. So I turned my life into an RPG with an AI game master—and it's working.

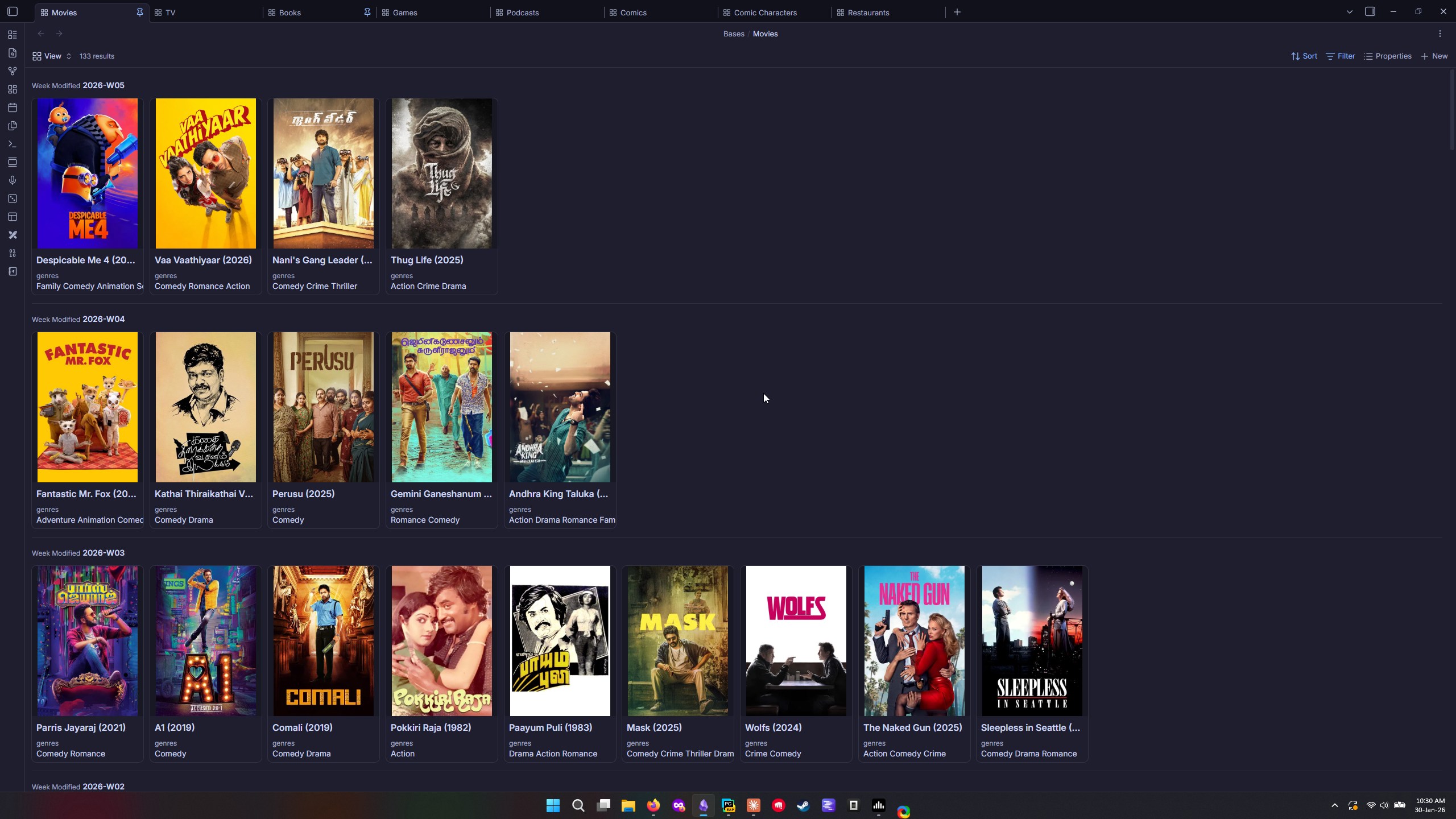

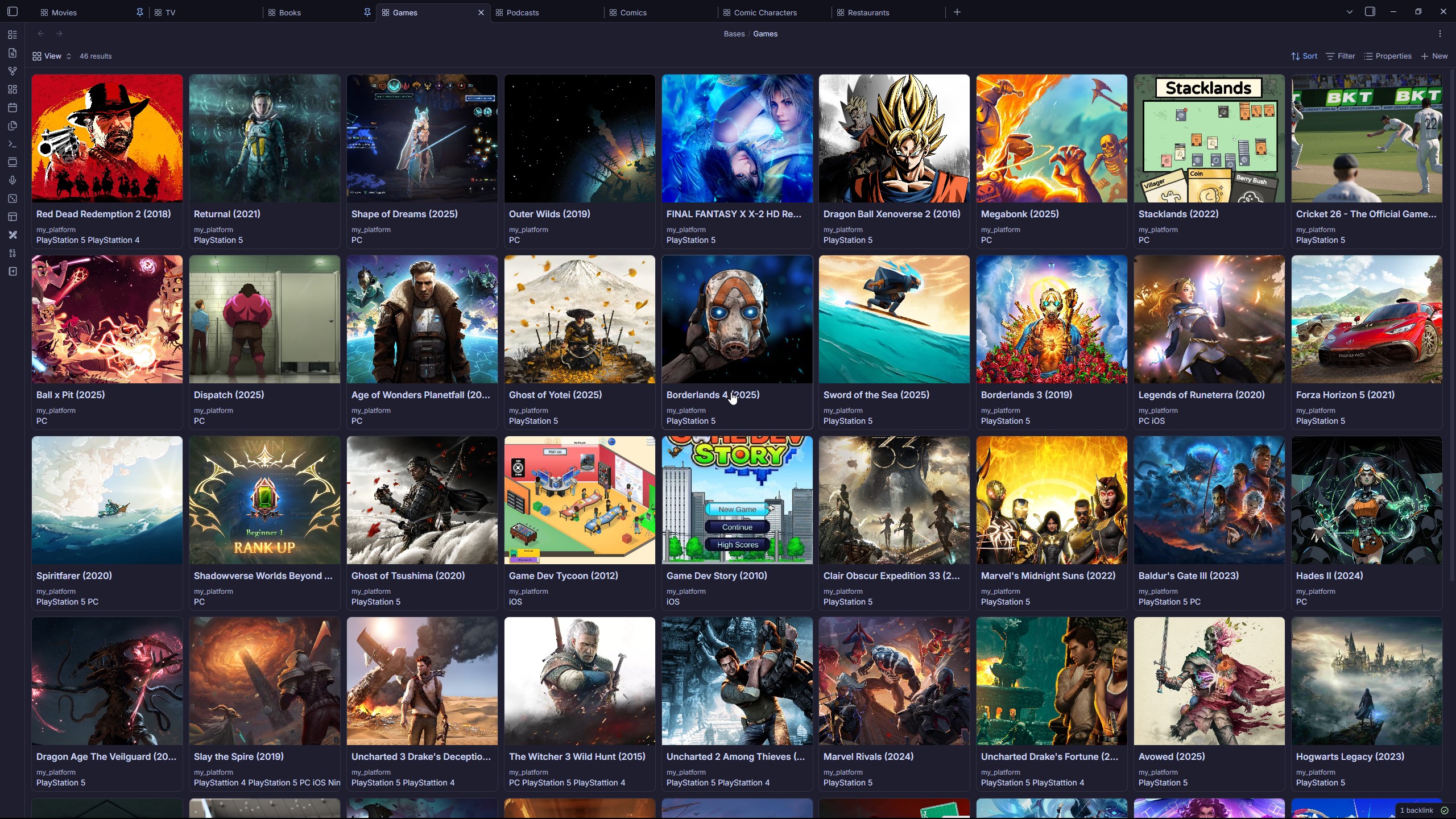

In my previous blog posts, I detailed how I architected a system using RAG, Neo4j, and MCP servers to gain insights from my Obsidian notes. I keep track of my daily activities, along with relevant metadata, as much as possible. The problem was that extracting meaningful actions through logic and regex alone only worked to an extent.

For example, whenever I mentioned a movie wiki link in a daily note, I marked that movie as watched on that day. This limited my writing: I couldn't reflect on a movie I had watched, say, three weeks ago without it being logged as watched again.

And for some actions like exercising, there is no metadata.

I needed something that could parse natural language into tangible actions. AI is the obvious answer, as it is for everything nowadays. I also had to tackle my other problem: the lack of motivation to write things down. One thing led to another, and I decided to gamify the whole process. In other words, my life.

Context is the Key

Let's say I write in my Obsidian daily note: "I watched A Team today." It's a simple sentence, but the AI wouldn't know whether I'm referring to the movie or the TV show. Or when I write "I worked on Project Morgana today," the AI wouldn't have enough information to categorize it as work or a personal project.

That means I need to provide context wherever possible. The good news is that I already have that context in the form of wiki links. The tricky part is that each wiki link is its own Markdown file with its own properties, and I need to give the AI a way to discover these files.

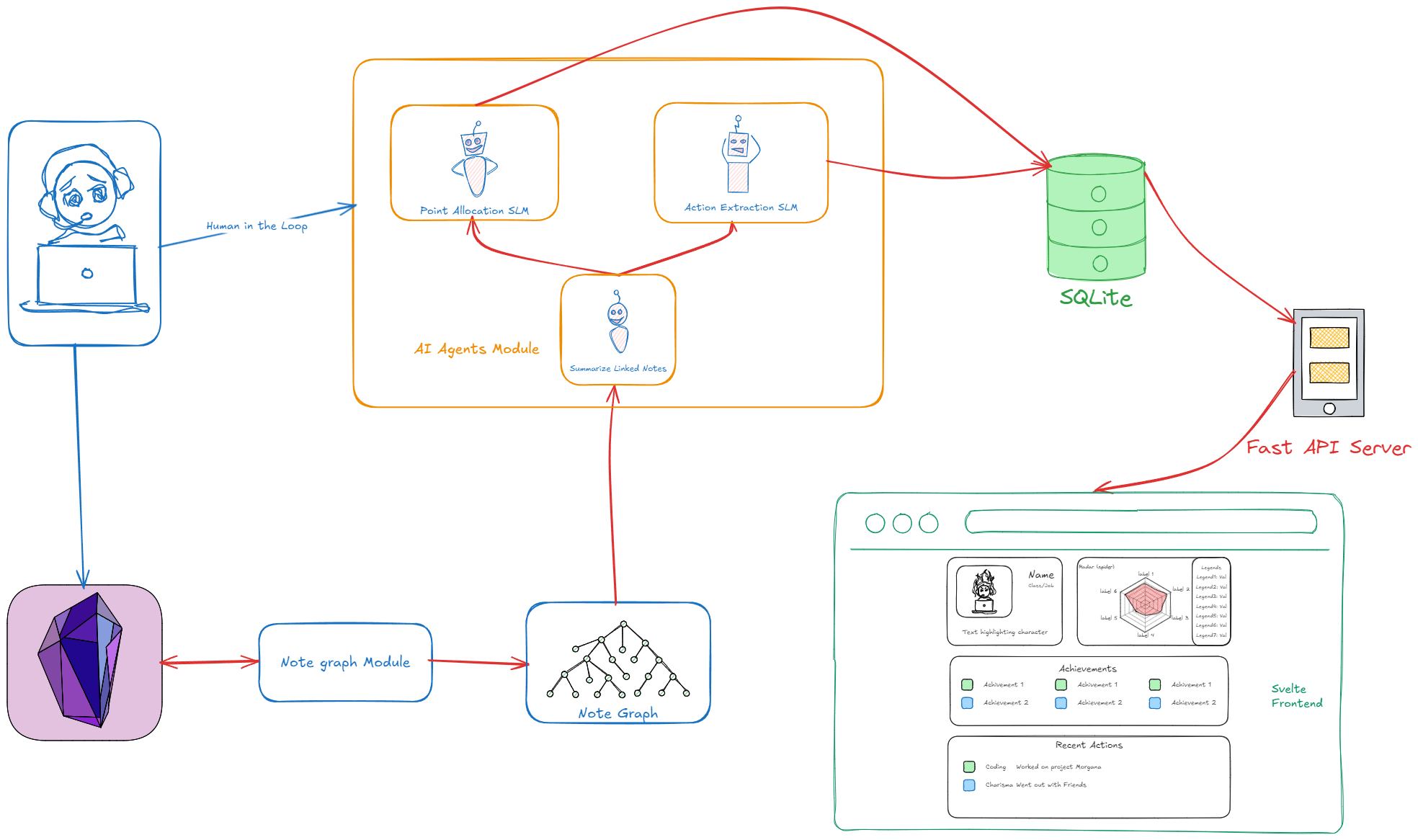

So I built a NoteGraph module. Given an entry point (a file), the NoteGraph parses each wiki link, does a breadth-first traversal, and returns the file locations of the linked notes. This also lets me control the depth of the traversal. For my use case, a depth of 1 is more than sufficient.
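A minimal sketch of that traversal might look like the following. It is not the actual NoteGraph module: the function name `linked_notes`, the regex, and the assumption that every note lives at `<vault>/<note name>.md` in a flat vault are all illustrative.

```python
import re
from collections import deque
from pathlib import Path

# Matches [[Target]], [[Target|alias]] and [[Target#Heading|alias]].
# Only the note name before any '#' or '|' is captured.
WIKI_LINK = re.compile(r"\[\[([^\]|#]+)")


def linked_notes(entry: Path, vault: Path, max_depth: int = 1) -> list:
    """Breadth-first walk over wiki links, returning the linked note paths.

    Assumption (hypothetical layout): each note is stored as
    <vault>/<note name>.md. Links to missing notes are skipped.
    """
    seen = {entry.resolve()}
    queue = deque([(entry, 0)])
    found = []
    while queue:
        note, depth = queue.popleft()
        if depth == max_depth:
            continue  # do not follow links beyond the requested depth
        for name in WIKI_LINK.findall(note.read_text(encoding="utf-8")):
            target = vault / f"{name.strip()}.md"
            if target.exists() and target.resolve() not in seen:
                seen.add(target.resolve())
                found.append(target)
                queue.append((target, depth + 1))
    return found
```

With `max_depth=1` only the notes linked directly from the daily note come back, which matches the depth described above. Raising it pulls in the notes that those notes link to, in breadth-first order.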

I had to enhance this to work with links that point to a specific part of a file instead of the whole file.

Listened to [[Good Areas Podcast#[ 8]Every Team’s BEST & WORST Case at the 2026 T20 World Cup| Jarrod and Deepak's thoughts on the upcoming T20WC]].

In that case, I don't need the entire Good Areas Podcast file for context, only the contents under the heading [ 8]Every Team’s BEST & WORST Case at the 2026 T20 World Cup. That's the only relevant information needed for processing.
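One way to handle such links is to split the link into its parts and then pull out only the matching section. The sketch below is an illustration, not the actual implementation: `split_link` and `section_under` are hypothetical names, and it assumes the heading text in the link matches the heading in the note exactly. If the two differ (for example, because some characters are dropped from the link text), the comparison would need some normalization first.

```python
import re
from typing import Optional, Tuple


def split_link(link: str) -> Tuple[str, Optional[str], Optional[str]]:
    """Split the inside of [[...]] into (note, heading, alias)."""
    target, _, alias = link.partition("|")
    note, _, heading = target.partition("#")
    return note.strip(), heading.strip() or None, alias.strip() or None


def section_under(markdown: str, heading: str) -> str:
    """Return the text under `heading`, stopping at the next heading
    of the same or a higher level. Returns "" if the heading is absent."""
    body, level = [], None
    for line in markdown.splitlines():
        m = re.match(r"(#+)\s+(.*)", line)
        if level is None:
            # Still searching for the requested heading.
            if m and m.group(2).strip() == heading:
                level = len(m.group(1))
            continue
        if m and len(m.group(1)) <= level:
            break  # reached a sibling or parent heading
        body.append(line)
    return "\n".join(body).strip()
```

Nested subheadings stay part of the section, so the AI still sees everything written under that one episode and nothing from the rest of the file.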

The Summarizer, The Extractor, The Allocator

With the preprocessing done, I just had two things left to do. I needed one AI agent to extract actions (to be used later for achievement tracking, which is yet to be implemented) and another agent for point allocation. In both cases, I couldn't just let the agents allocate points or categorize actions willy-nilly.

I had to come up with categories for both. I settled on 7 stats and 21 action categories. The next issue was the agents' output. I needed it in a particular structure so I could put it in a SQLite database. Pydantic AI took care of this by validating each agent's output against a schema.
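To illustrate why a fixed structure matters, here is a rough sketch of validating agent output before it reaches SQLite. The real project relies on Pydantic AI for this; the sketch swaps in standard-library dataclasses to stay dependency-free. Everything specific in it is an assumption: only Charisma and Focus are named in this post, the category names are placeholders for the 21 real ones, the 0 to 10 point range is invented, and extraction and allocation are folded into one record for brevity.

```python
import json
import sqlite3
from dataclasses import dataclass

# Only Charisma and Focus are named in the post; the other stats are omitted.
STATS = {"charisma", "focus"}
# Hypothetical category names standing in for the 21 real ones.
CATEGORIES = {"watched_movie", "exercise", "personal_project"}


@dataclass(frozen=True)
class Action:
    date: str
    category: str
    description: str
    stat: str
    points: int

    def __post_init__(self):
        # Reject anything outside the agreed vocabulary or range.
        if self.category not in CATEGORIES:
            raise ValueError(f"unknown category: {self.category}")
        if self.stat not in STATS:
            raise ValueError(f"unknown stat: {self.stat}")
        if not isinstance(self.points, int) or not 0 <= self.points <= 10:
            raise ValueError(f"points out of range: {self.points}")


def store(conn: sqlite3.Connection, raw_json: str) -> int:
    """Validate the agent's JSON output, then insert it. Returns the row count."""
    # Validation happens first, so a bad item means nothing is written.
    actions = [Action(**item) for item in json.loads(raw_json)]
    conn.execute(
        "CREATE TABLE IF NOT EXISTS actions "
        "(date TEXT, category TEXT, description TEXT, stat TEXT, points INTEGER)"
    )
    conn.executemany(
        "INSERT INTO actions VALUES (?, ?, ?, ?, ?)",
        [(a.date, a.category, a.description, a.stat, a.points) for a in actions],
    )
    conn.commit()
    return len(actions)
```

Because every item is validated before the first insert, an invented category or an out-of-range score stops the whole batch instead of leaving half of it in the database.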

I had the architecture mostly done, and I was hell-bent on using a small language model (SLM) for these tasks instead of an LLM. So I used LM Studio and picked the latest Qwen model that my laptop could support. I fired up everything and hit run, expecting it all to work without a hitch.

If all my years in software engineering have taught me anything, it's that things rarely work as expected the first time. That was the case here. The issue? Memory. I didn't have enough VRAM to fit the full contents of every linked note into the model's context.

After pondering, I decided that I would use an SLM to summarize the linked notes into smaller bite-sized pieces. The context and relationship to the daily notes were more important than the contents. This worked much better. The flow was complete and working.

But the quality of the outputs was not up to standard. Some models—Google's Gemma models were particularly notorious for this—would hallucinate tool calls that weren't even present in the system. Others would allocate points inconsistently or miscategorize actions entirely.

After hours of tweaking prompts and trying out different models of different sizes, I had my aha moment: I needed to clearly define what each stat represented and provide explicit guidelines for point allocation. It was about min-maxing the prompt itself—being precise about the rules of the game so the AI couldn't misinterpret them. Once I did that, things finally clicked.

Of course, it still isn't perfect, but it's 80% of the way there. The agent still sometimes extracts the same action twice, miscategorizes an action, or awards too many points. After all, SLMs are very limited. But I wanted something that works locally.

The solution? I gave myself veto power. I added a final review step where I can see what the agent extracted and the points it allocated. Accept or reject—that's my only choice. No tweaking individual stats, no gaming the numbers. Either the AI's judgment is reasonable, or it's not. I didn't want to give myself an easy way to award myself points. If I did, why use an AI agent in the first place?

Serving the Data and Future Work

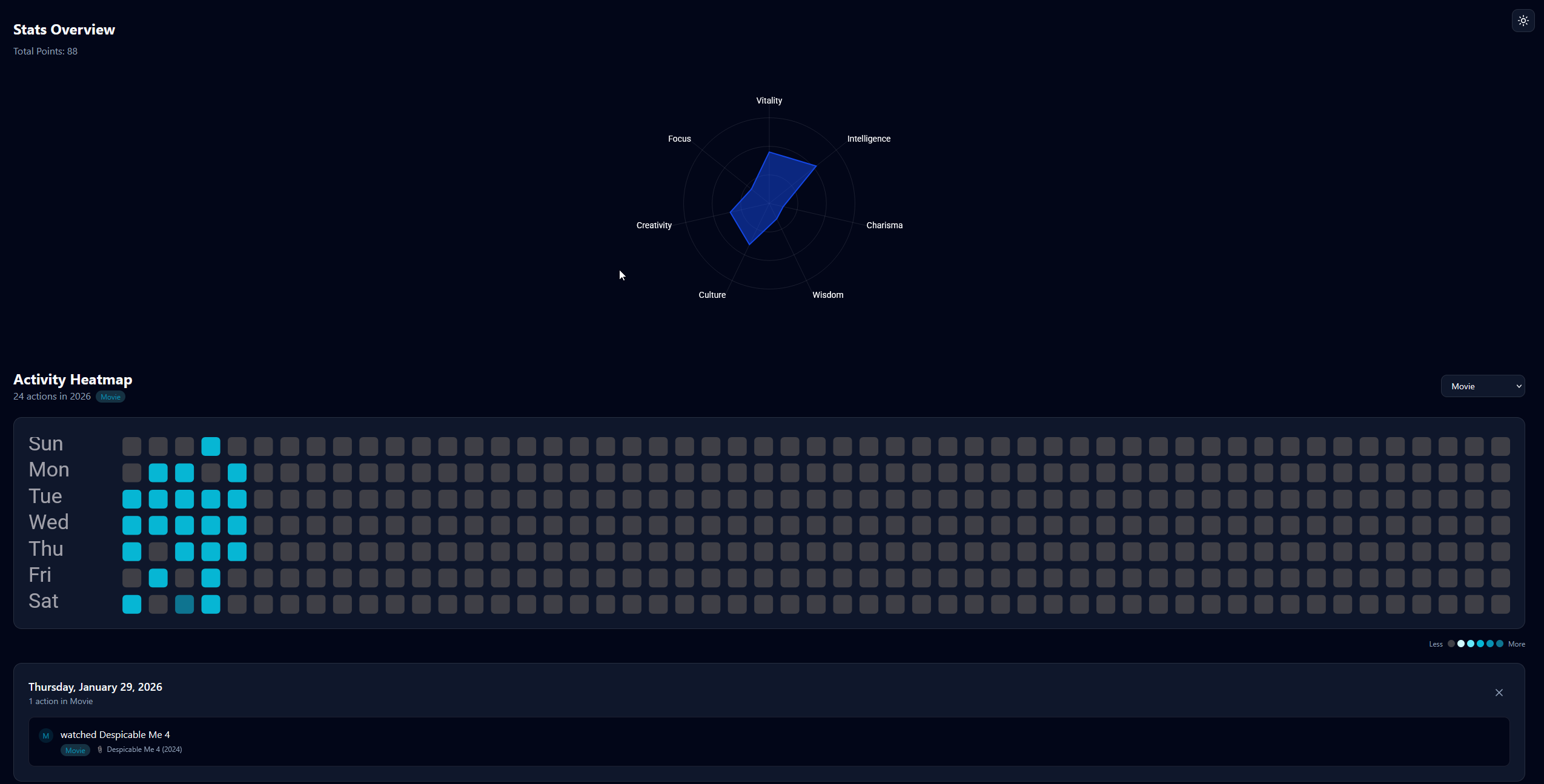

The bones were done. Now I just needed to dress it up. I served the points and actions from the SQLite database using REST endpoints (FastAPI). I consumed these endpoints in the frontend (Svelte) to present them in a radar chart showing my stat distribution and a heat map tracking daily activity patterns.
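The two visualizations boil down to two aggregations over the stored actions. The sketch below shows the kind of queries a FastAPI endpoint could wrap. The `actions` table, its `date`, `stat`, and `points` columns, the function names, and the sample rows are all assumptions made for illustration.

```python
import sqlite3


def stat_totals(conn: sqlite3.Connection) -> dict:
    """Total points per stat: one value per radar-chart axis."""
    rows = conn.execute(
        "SELECT stat, SUM(points) FROM actions GROUP BY stat ORDER BY stat"
    )
    return dict(rows.fetchall())


def daily_counts(conn: sqlite3.Connection) -> dict:
    """Number of actions per day: one cell per heat-map square."""
    rows = conn.execute(
        "SELECT date, COUNT(*) FROM actions GROUP BY date ORDER BY date"
    )
    return dict(rows.fetchall())


# Hypothetical sample data, only to make the sketch runnable on its own.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE actions (date TEXT, stat TEXT, points INTEGER)")
conn.executemany(
    "INSERT INTO actions VALUES (?, ?, ?)",
    [
        ("2026-01-05", "focus", 3),
        ("2026-01-05", "charisma", 2),
        ("2026-01-06", "focus", 5),
    ],
)
print(stat_totals(conn))
print(daily_counts(conn))
```

Keeping the aggregation in SQL means the endpoints return small, chart-ready JSON and the frontend only has to draw it.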

This was v1—functional, but bare-bones. There's still more I want to build. I've planned an achievements module where an LLM finds patterns in the actions and awards achievements dynamically. Imagine: 'Movie Marathon: Watched 5 films in a week'. The idea is to let the AI discover interesting patterns in my behavior rather than hard-coding every possible achievement.

Conclusion

Why do this? I want a system that works for me, and this one does. Ever since I got this project working, I have been writing more and understanding better how I spend my time. It's funny how my brain works. Once I put in a tracker, a streak counter, I started reading more.

Seeing the points, and seeing where I'm lacking, also motivates me to work on those areas. I want to socialize more to earn Charisma points, or hunker down and finish my unfinished personal projects for those Focus points. I have been seeing an improvement.

Is this sustainable long-term? I don't know yet. But for now, watching my stats grow is more motivating than any productivity framework I've tried. And that's enough.

If you'd like to follow my journey, please subscribe. If you have thoughts or suggestions, feel free to leave a comment or reach out to me.